|

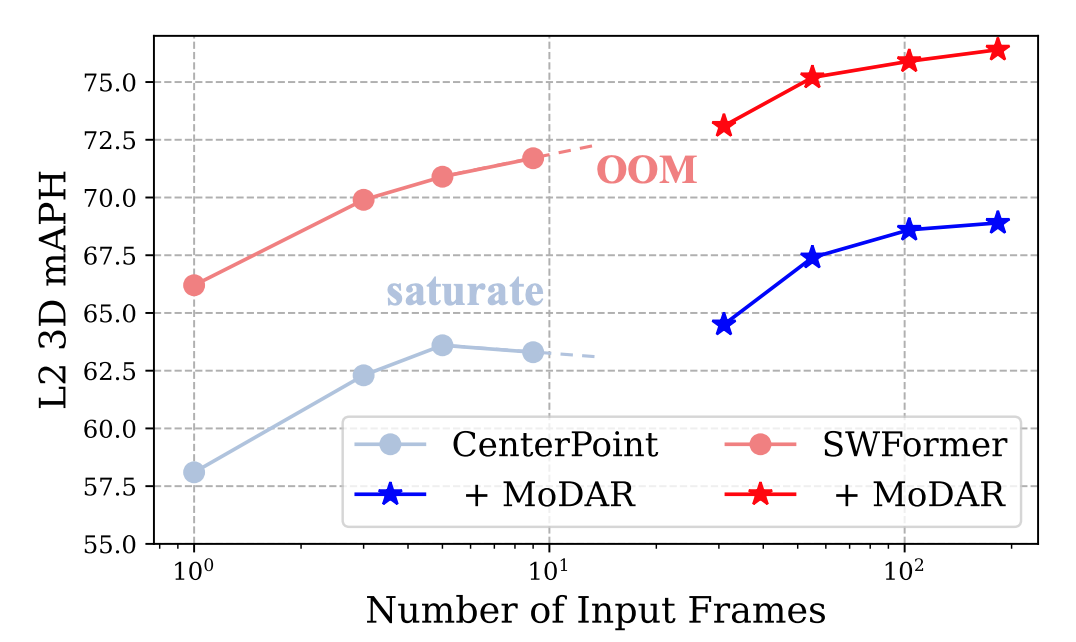

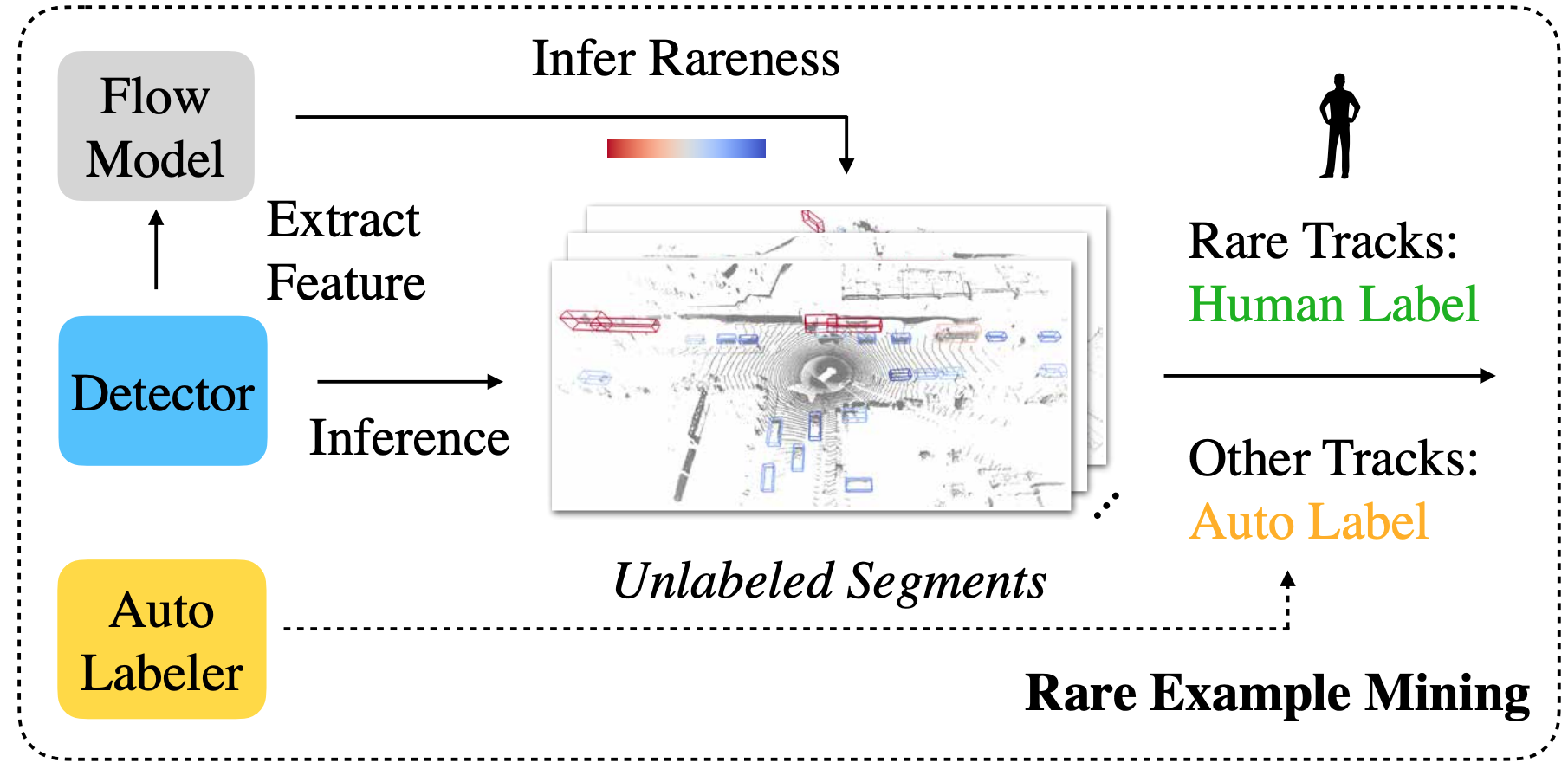

Mahyar Najibi, Jingwei Ji, Yin Zhou, Charles R. Qi, Xinchen Yan, Scott Ettinger, Dragomir Anguelov

ICCV 2023

paper / bibtex

|

|

Yingwei Li*, Charles R. Qi*, Yin Zhou, Chenxi Liu, Dragomir Anguelov (*: equal contribution)

CVPR 2023

paper / bibtex

|

|

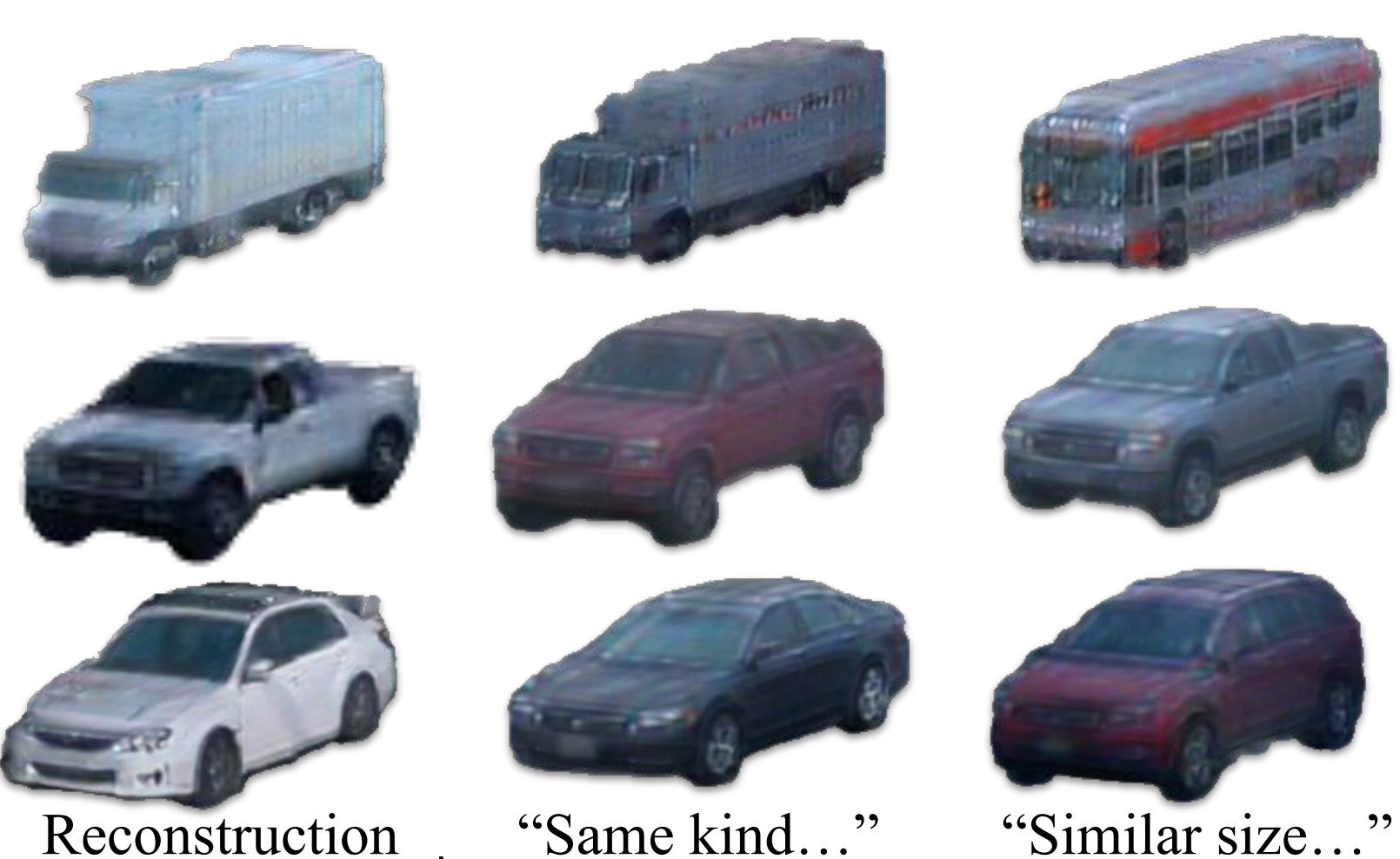

Bokui Shen, Xinchen Yan, Charles R. Qi, Mahyar Najibi, Boyang Deng, Leonidas Guibas, Yin Zhou, Dragomir Anguelov

CVPR 2023

paper / bibtex / dataset

|

|

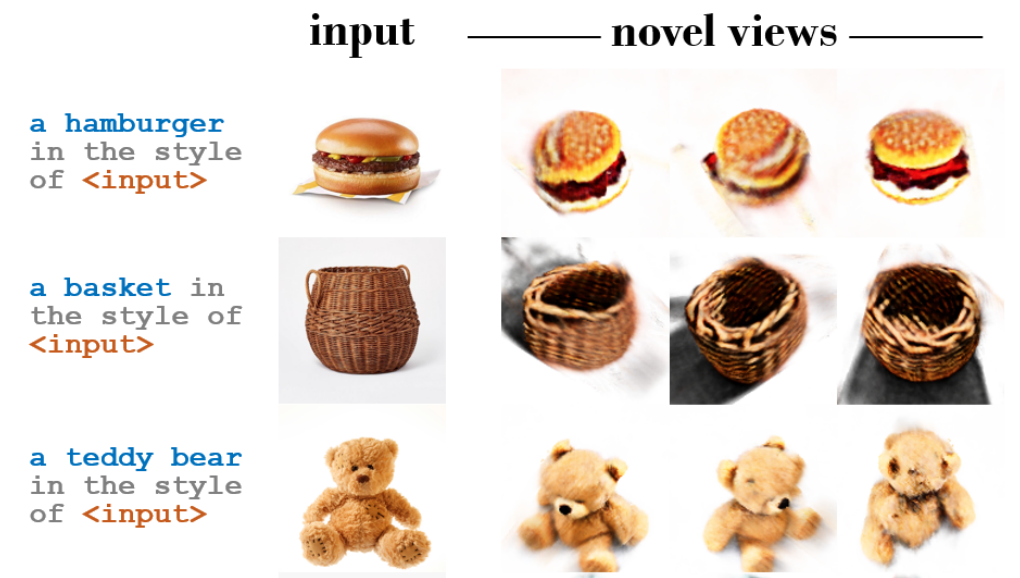

Congyue Deng, Chiyu Max Jiang, Charles R. Qi, Xinchen Yan, Yin Zhou, Leonidas Guibas, Dragomir Anguelov

CVPR 2023

paper / bibtex

|

|

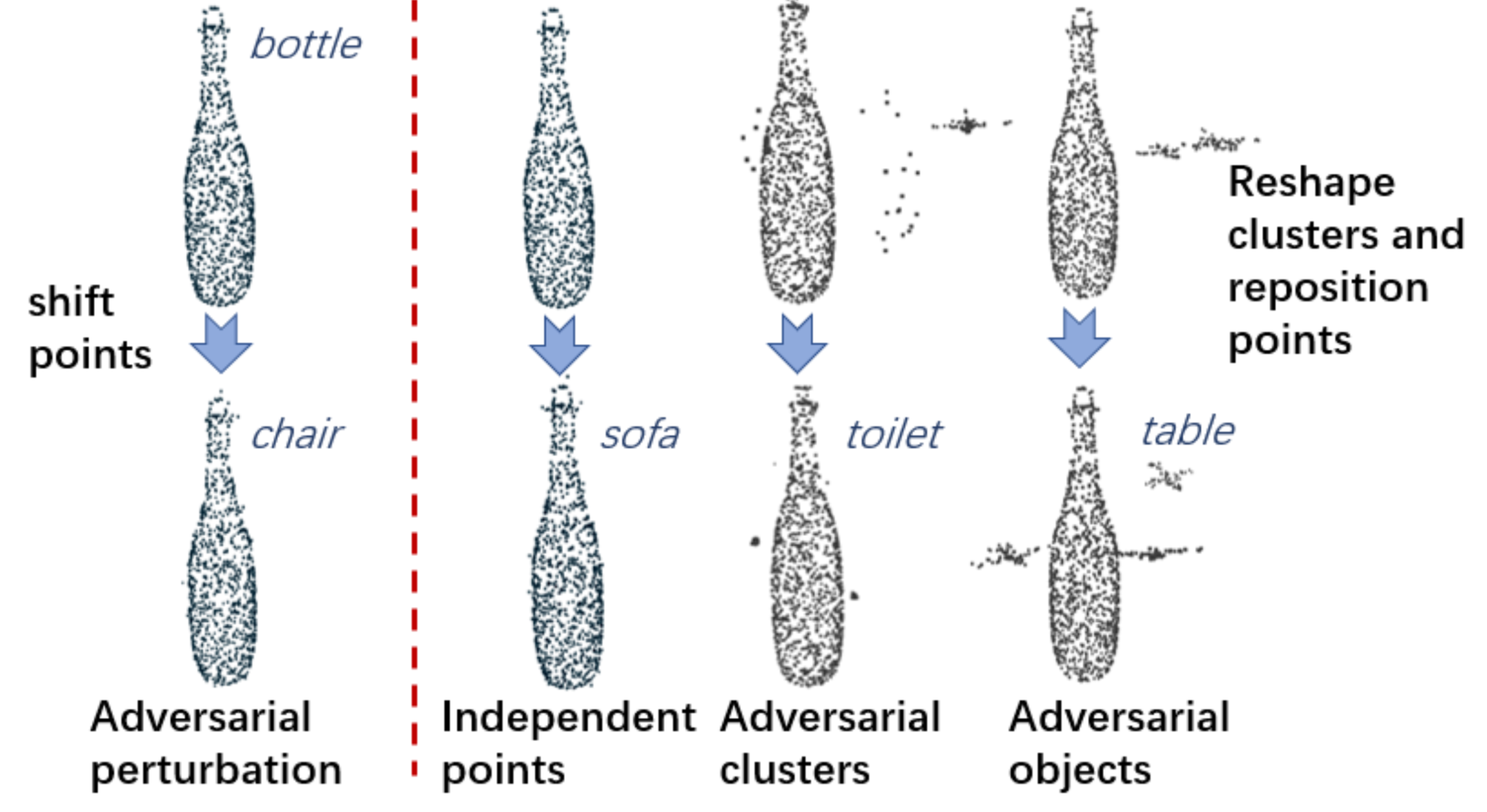

Minghua Liu, Yin Zhou, Charles R. Qi, Boqing Gong, Hao Su, Dragomir Anguelov

ECCV 2022, Oral Presentation

paper / bibtex

|

|

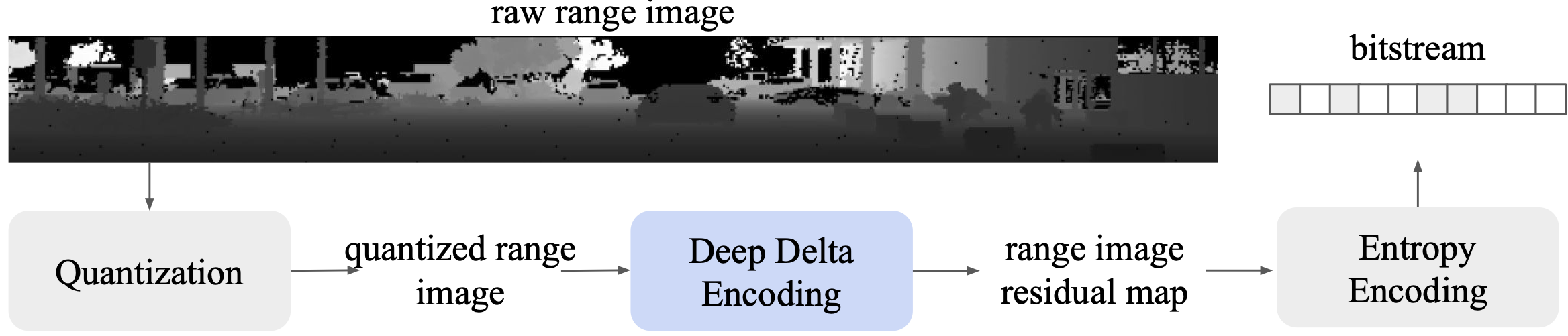

Chiyu (Max) Jiang, Mahyar Najibi, Charles R. Qi, Yin Zhou, Dragomir Anguelov

ECCV 2022

paper / bibtex

|

|

Xuanyu Zhou*, Charles R. Qi*, Yin Zhou, Dragomir Anguelov (*: equal contribution)

CVPR 2022

paper / bibtex

|

|

Boyang Deng, Charles R. Qi, Mahyar Najibi, Thomas Funkhouser, Yin Zhou, Dragomir Anguelov

NeurIPS 2021

paper / bibtex

|

|

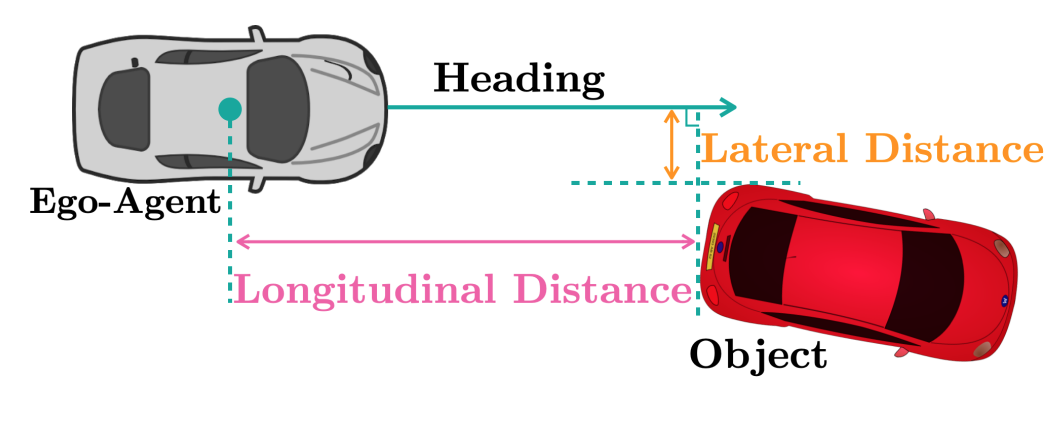

S. Ettinger, S. Cheng, B. Caine, C. Liu, H. Zhao, S. Pradhan, Y. Chai, B. Sapp, Charles R. Qi, Y. Zhou, Z. Yang, A. Chouard, P. Sun, J. Ngiam, V. Vasudevan, A. McCauley, J. Shlens, D. Anguelov

ICCV 2021

paper / dataset / bibtex

|

|

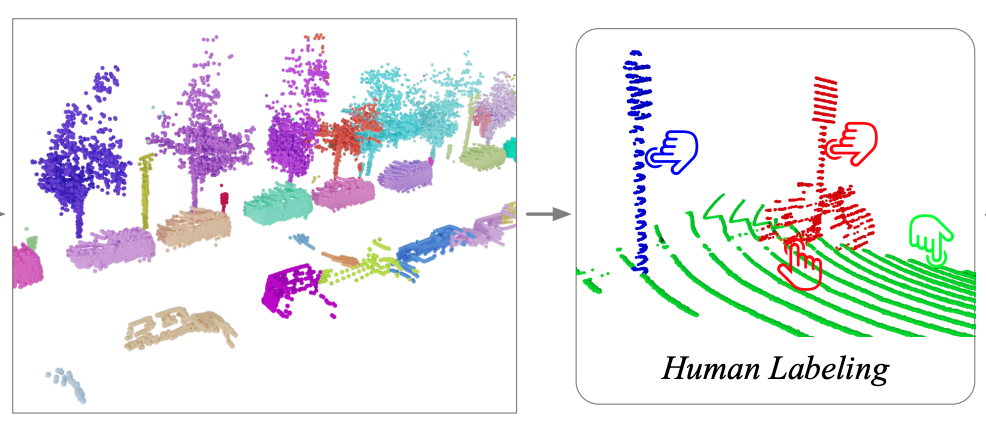

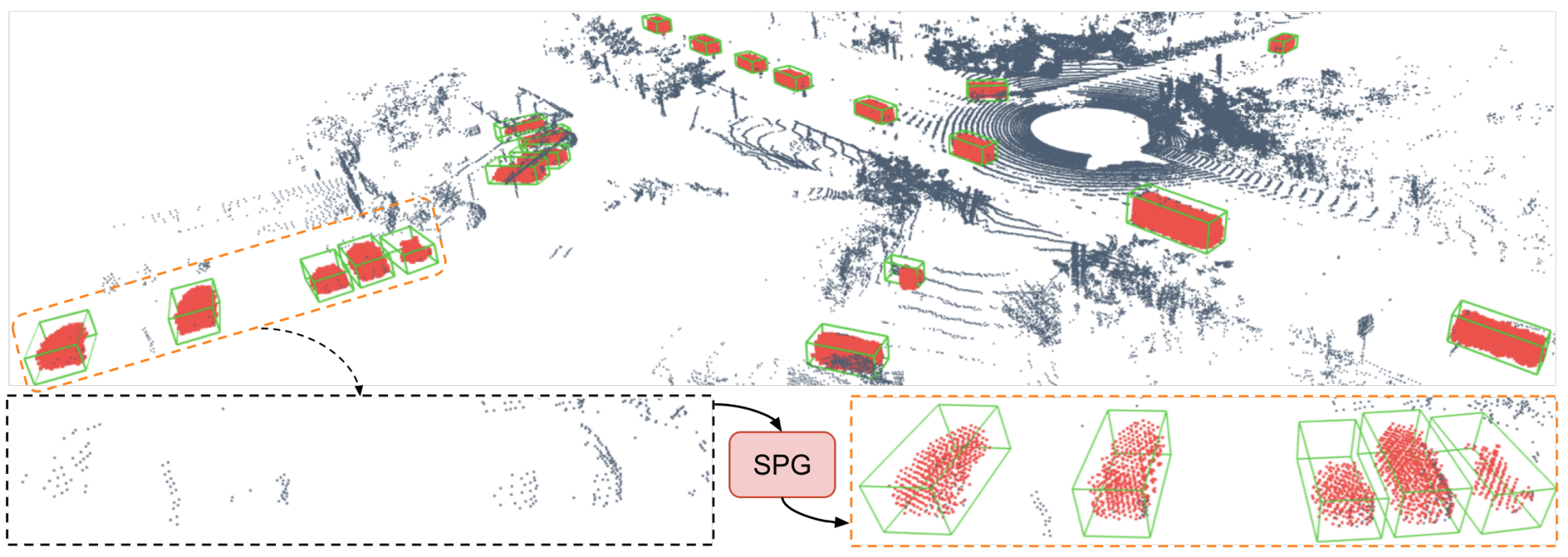

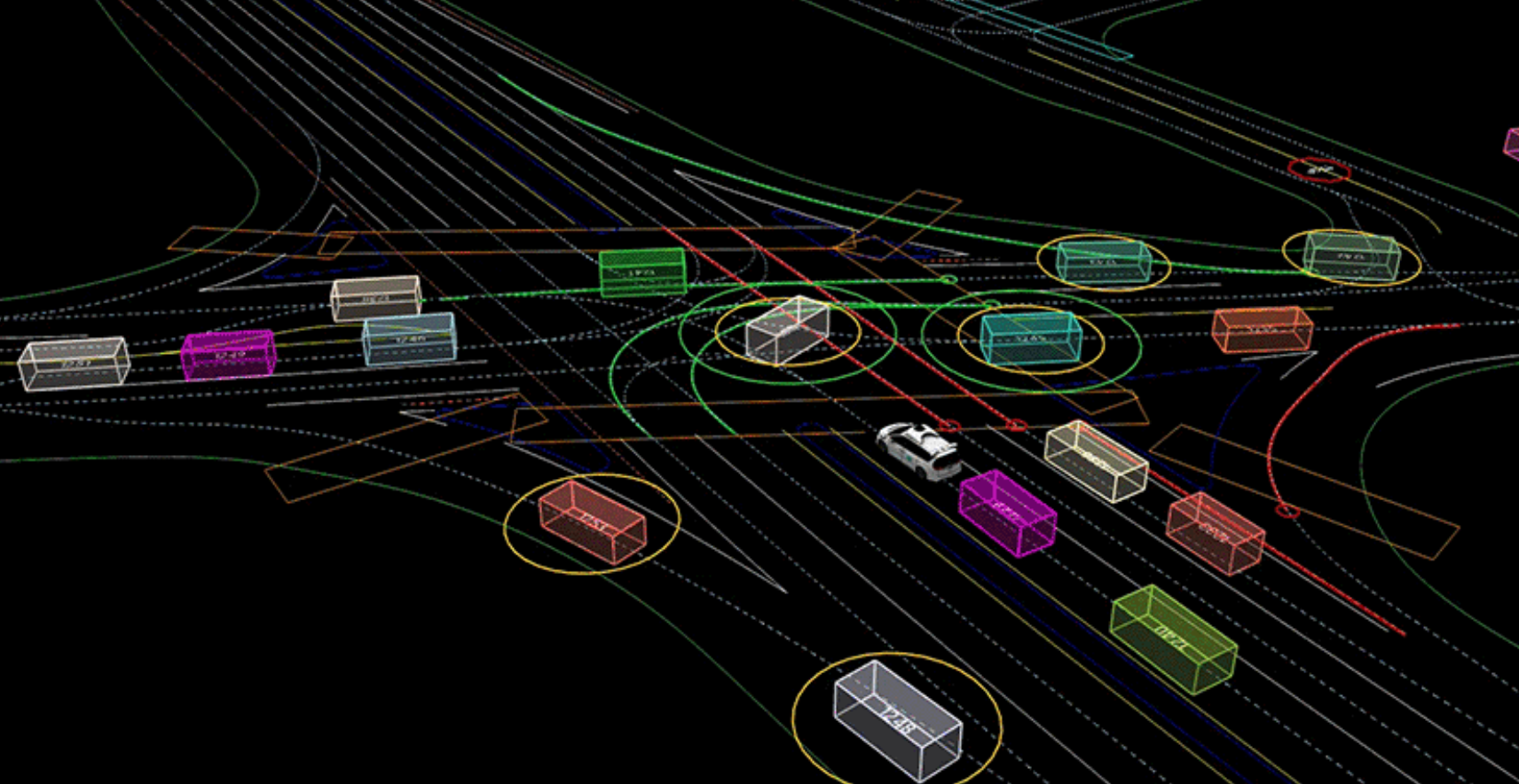

Charles R. Qi, Yin Zhou, Mahyar Najibi, Pei Sun, Khoa Vo, Boyang Deng, Dragomir Anguelov

CVPR 2021

paper / blog post / bibtex / talk

|

|

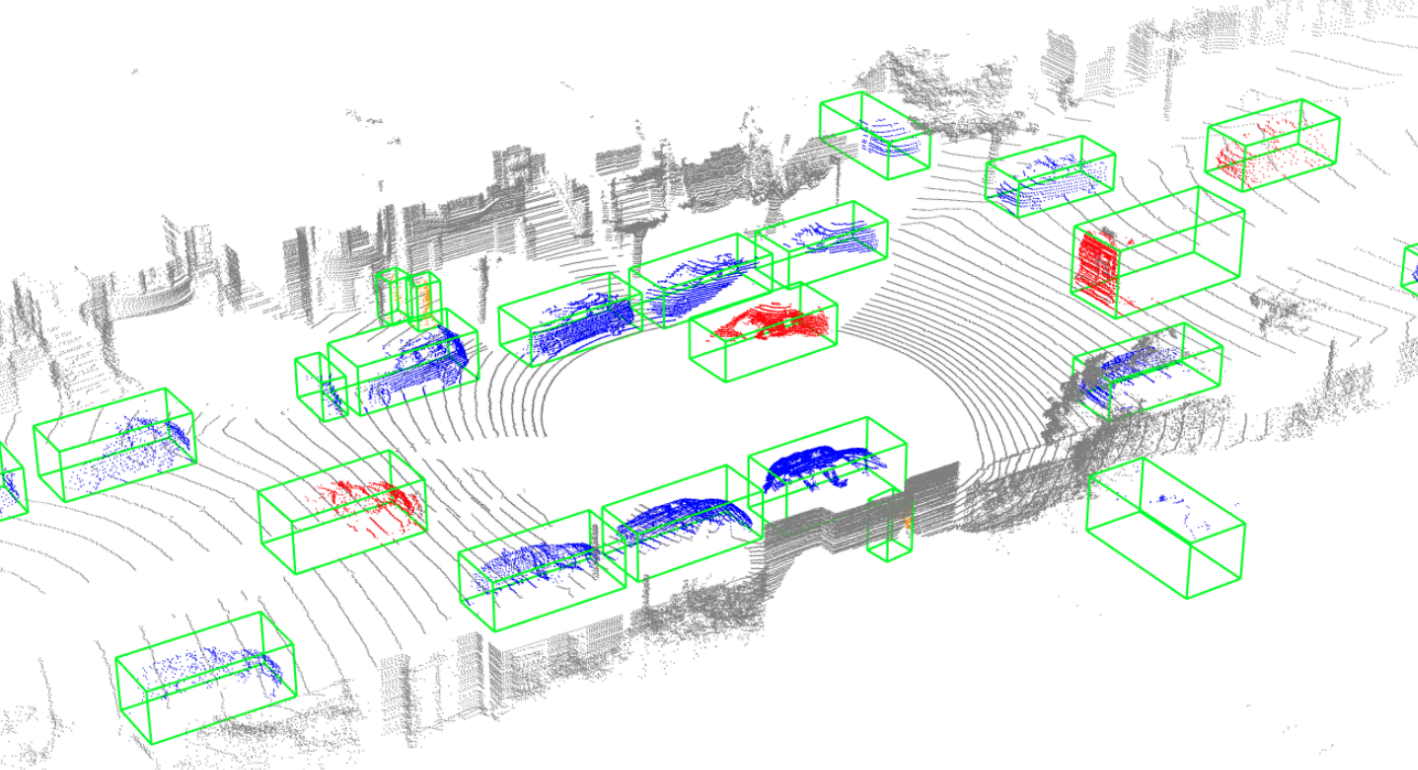

Saining Xie, Jiatao Gu, Demi Guo, Charles R. Qi, Leonidas J. Guibas, Or Litany

ECCV 2020, Spotlight

paper / code / bibtex

|

|

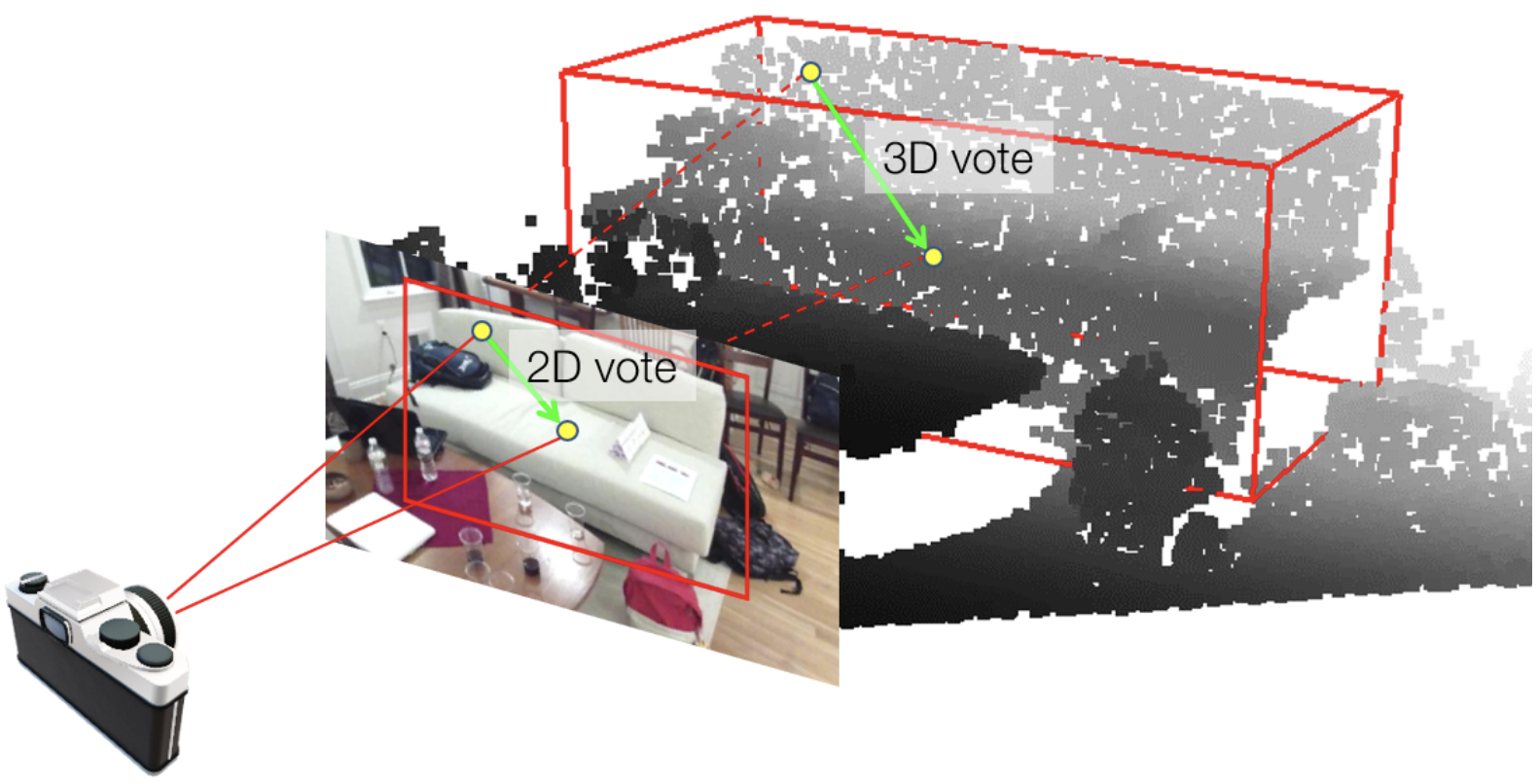

Charles R. Qi*, Xinlei Chen*, Or Litany, Leonidas J. Guibas (*: equal contribution)

CVPR 2020

paper / bibtex / code

|

|

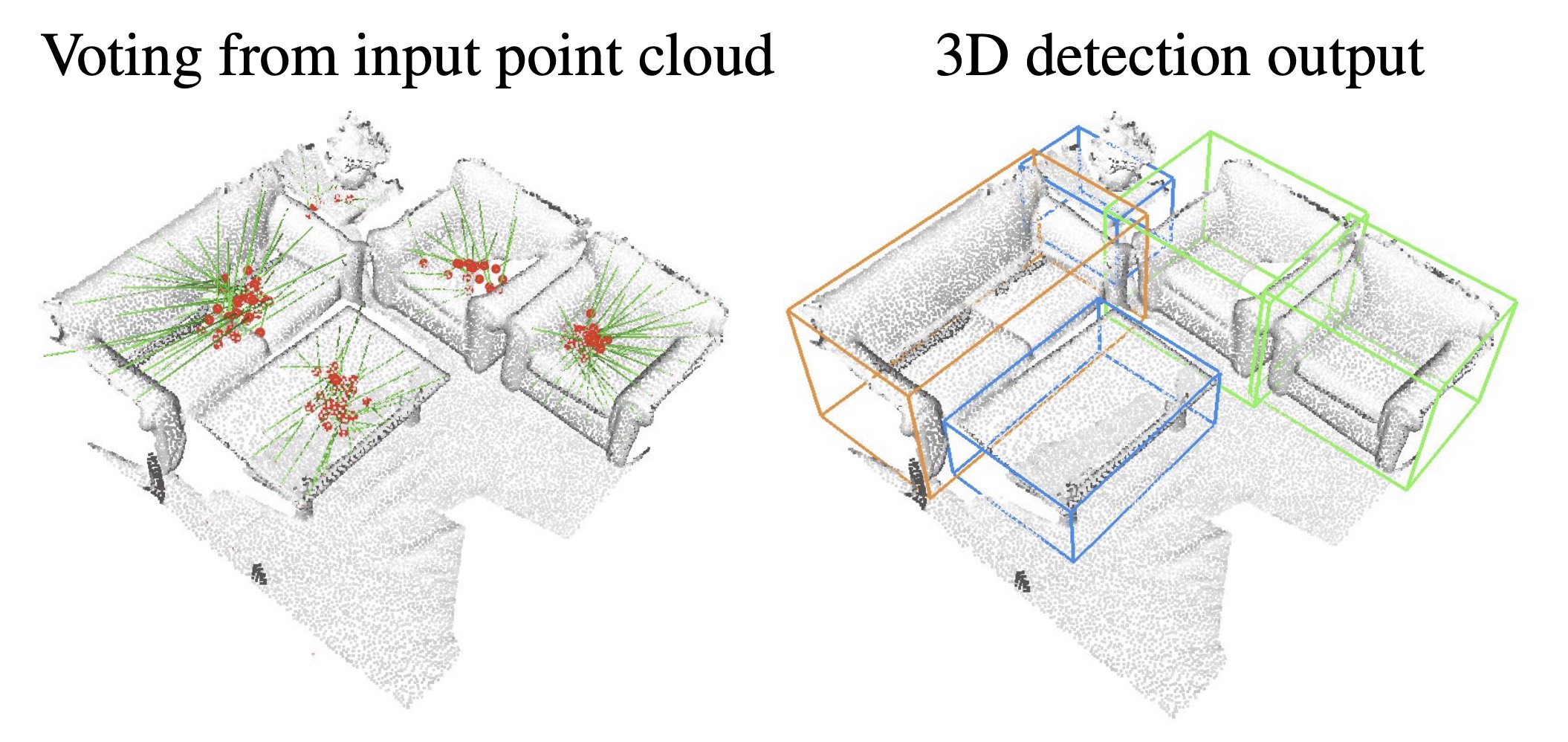

Charles R. Qi, Or Litany, Kaiming He, Leonidas J. Guibas

ICCV 2019, Oral Presentation, Best Paper Award Nomination (one of the seven among 1,075 accepted papers) [link]

paper / bibtex / code / talk

|

|

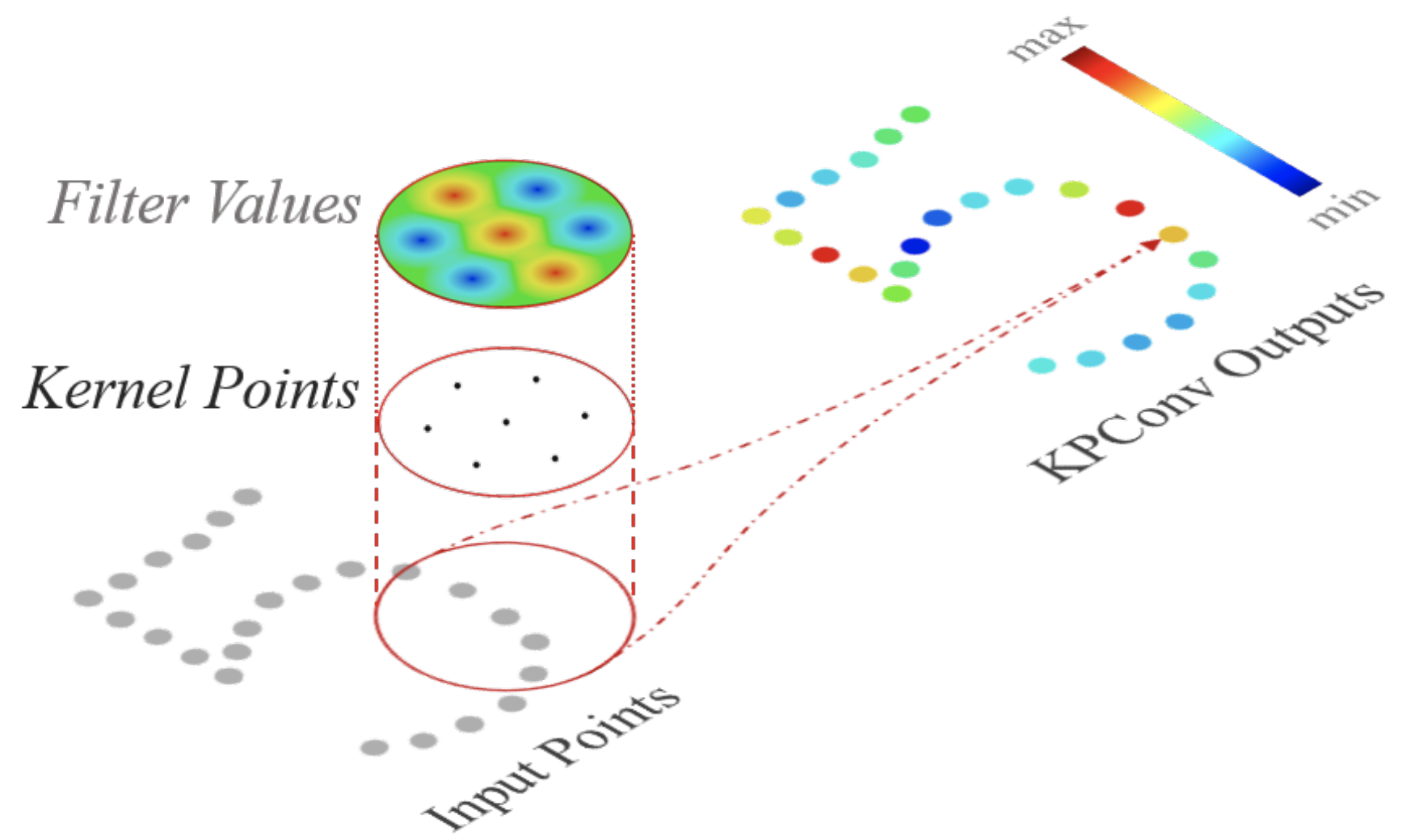

Hugues Thomas, Charles R. Qi, Jean-Emmanuel Deschaud, Beatriz Marcotegui, Francois Goulette, Leonidas J. Guibas

ICCV 2019

paper / bibtex / code

|

|

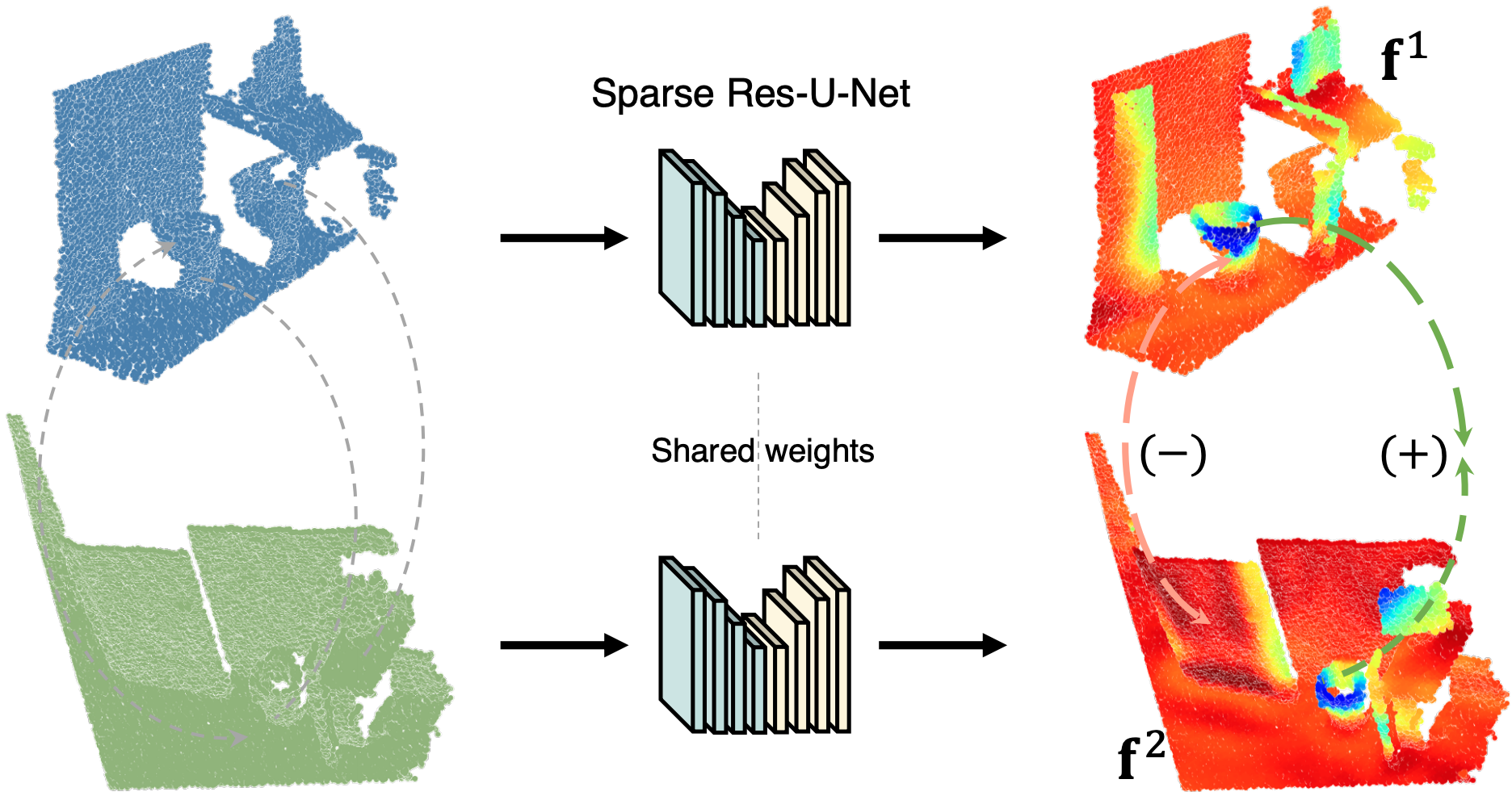

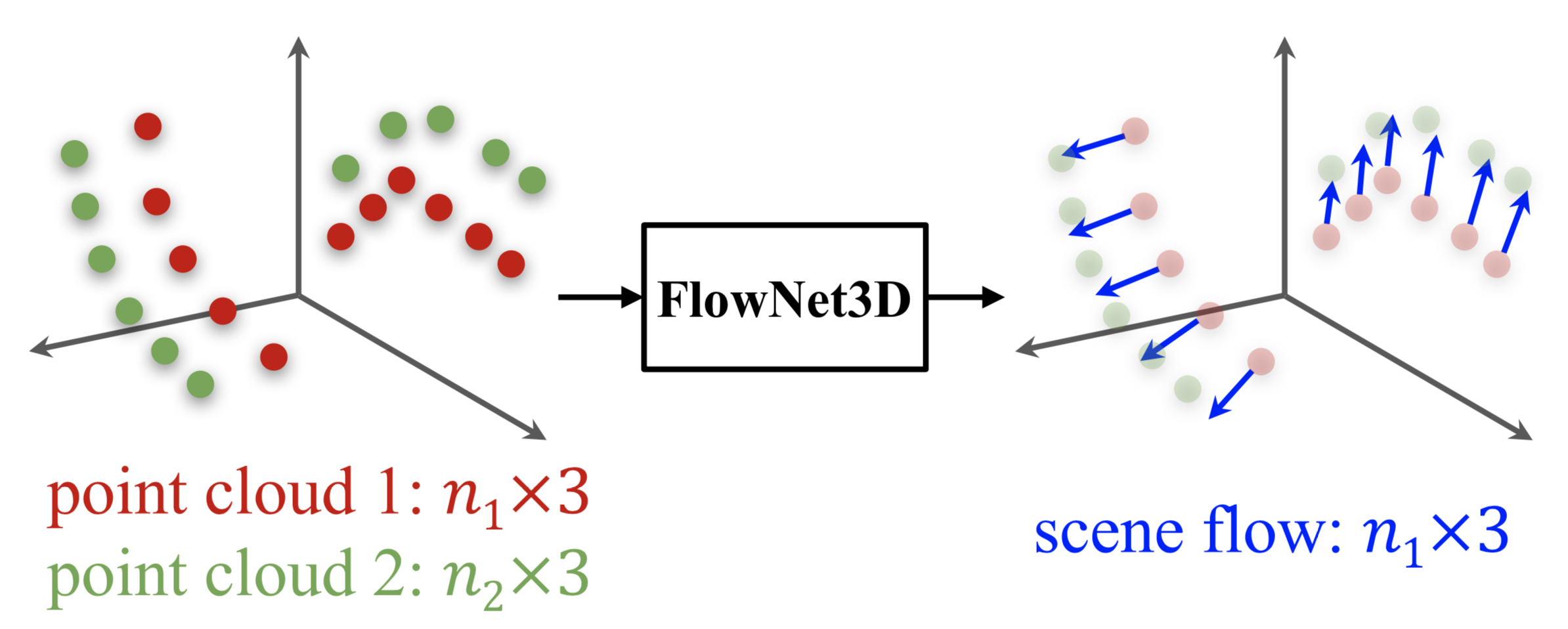

Xingyu Liu*, Charles R. Qi*, Leonidas Guibas (*: equal contribution)

CVPR 2019

paper / bibtex / code

|

|

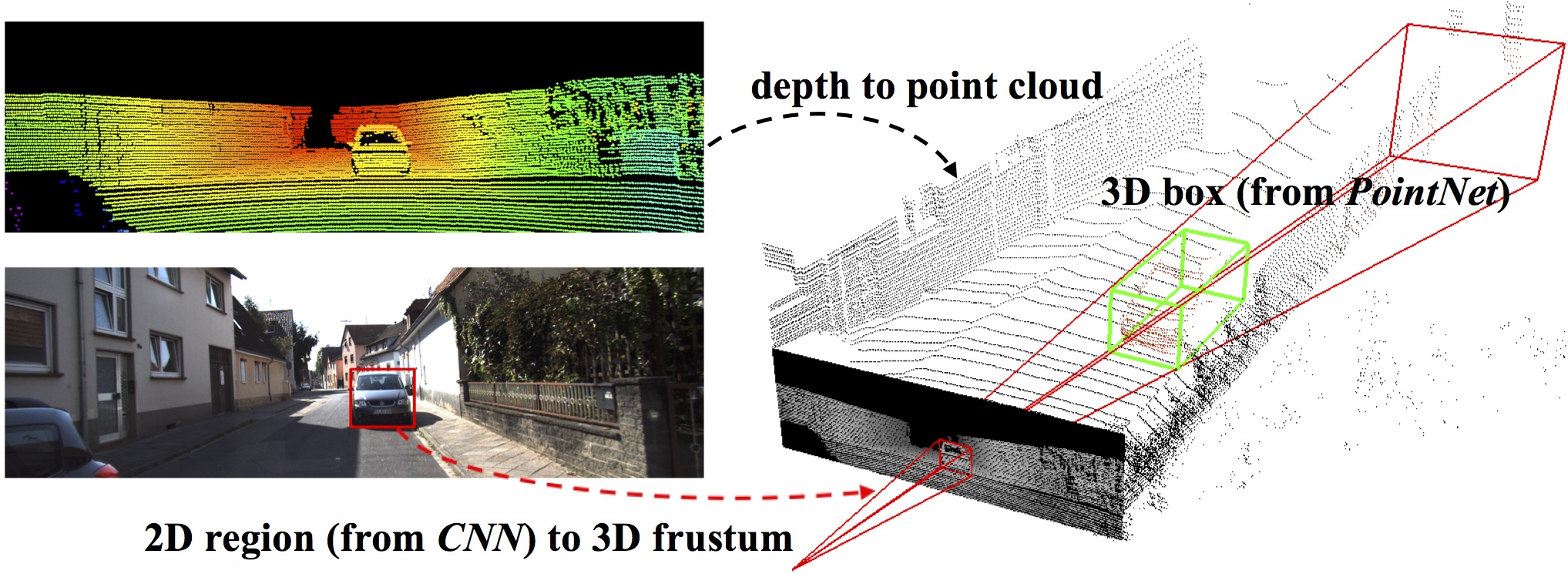

Charles R. Qi, Wei Liu, Chenxia Wu, Hao Su, and Leonidas J. Guibas

CVPR 2018

Our method is simple, efficient, and effective, ranking first on the KITTI 3D object detection benchmark in all categories (as of 11/27/2017).

paper / bibtex / code / website

|

|

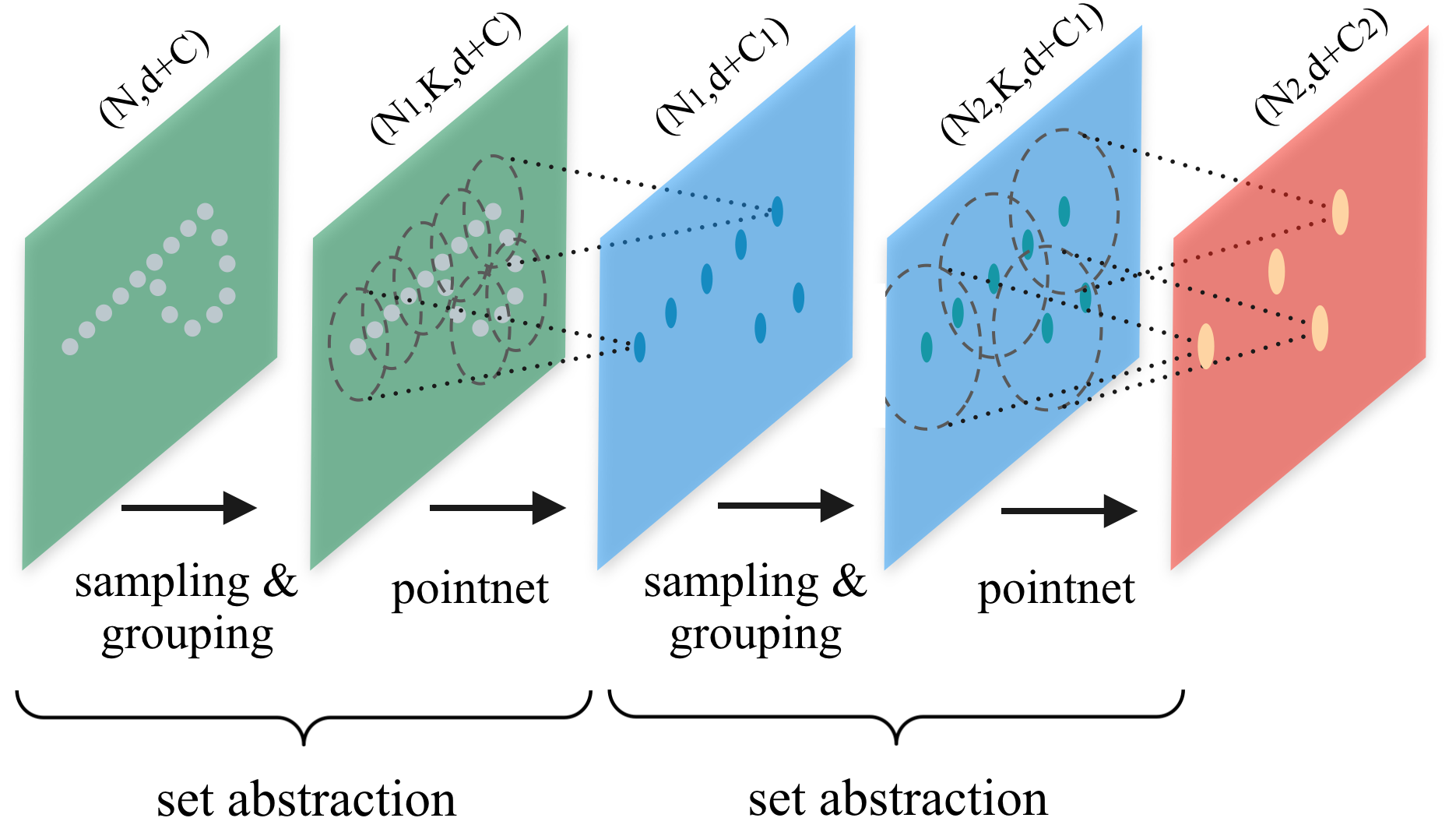

Charles R. Qi, Li Yi, Hao Su, and Leonidas J. Guibas

NIPS 2017

paper / bibtex / code / website / poster

|

|

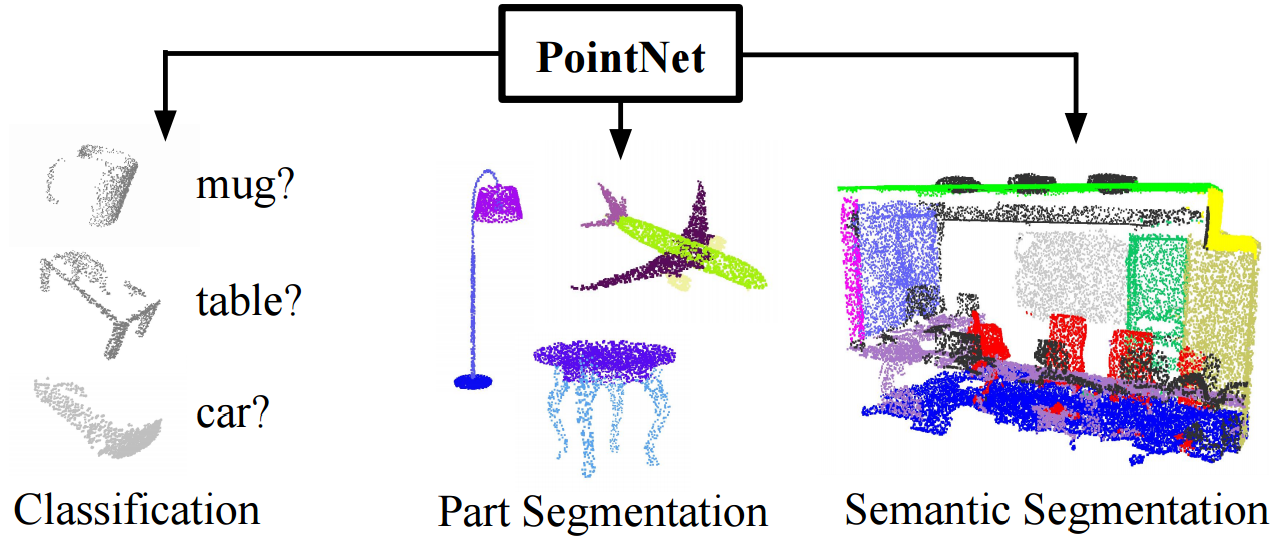

Charles R. Qi*, Hao Su*, Kaichun Mo, and Leonidas J. Guibas (*: equal contribution)

CVPR 2017, Oral Presentation

paper / bibtex / code / website / presentation video

|

|

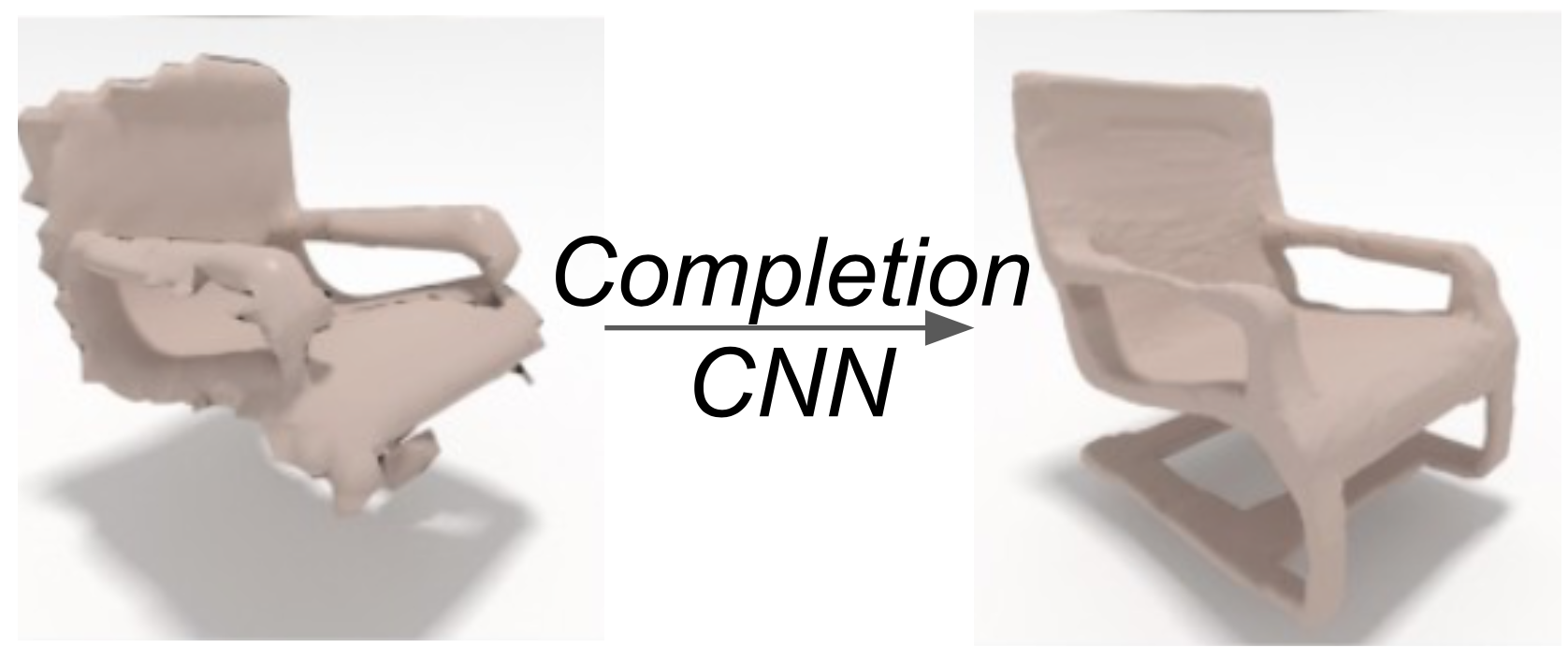

Angela Dai, Charles R. Qi, Matthias Niessner

CVPR 2017, Spotlight Presentation

paper / bibtex / website (code & data available)

|

|

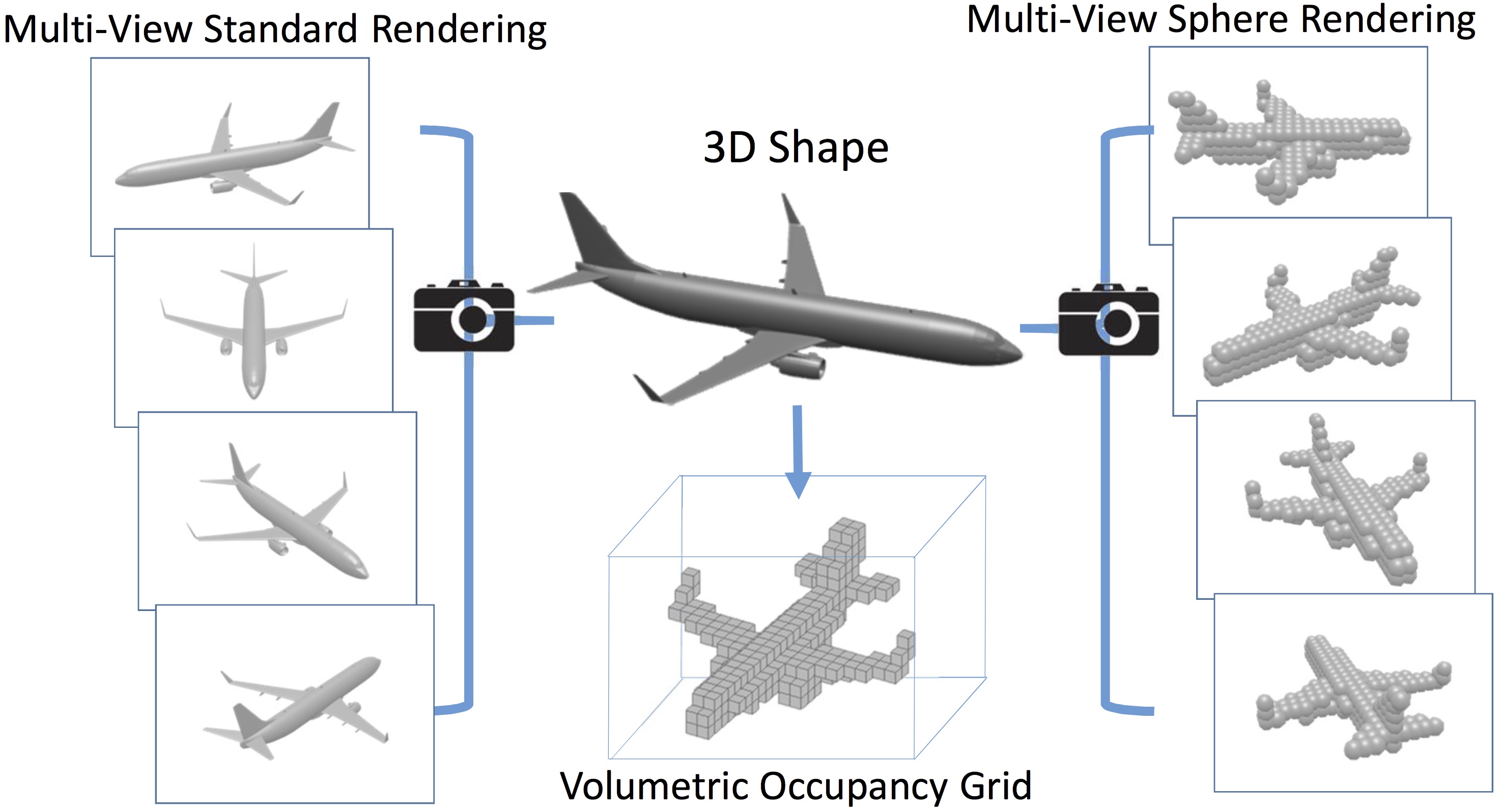

Charles R. Qi*, Hao Su*, Matthias Niessner, Angela Dai, Mengyuan Yan, and Leonidas J. Guibas (*: equal contribution)

CVPR 2016, Spotlight Presentation

paper / bibtex / code / website / supp / presentation video

|

|

Yangyan Li*, Hao Su*, Charles R. Qi, Noa Fish, Daniel Cohen-Or, and Leonidas J. Guibas (*: equal contribution)

SIGGRAPH Asia 2015

paper / bibtex / code / website / live demo

|

|

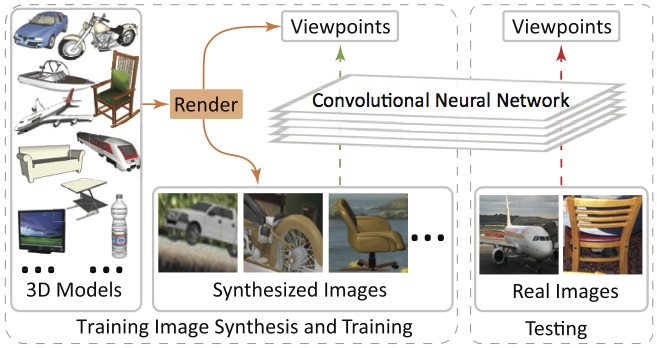

Hao Su*, Charles R. Qi*, Yangyan Li, Leonidas J. Guibas (*: equal contribution)

ICCV 2015, Oral Presentation

paper / bibtex / code / website / presentation video

|

- Invited Speaker. 3D Perception from Multi-X. 2023.

- Invited Speaker. Offboard Perception for Autonomous Driving. 2021.

- Invited Speaker and organizer. Deep Learning on Point Cloud and Other 3D Forms. 3D Deep Learning Tutorial at CVPR 2017, Honolulu. [video]

- Guest Lecturer. 3D Object Detection: The History, Present and Future. 2021. CSE219: Machine Learning Meets Geometry, UC San Diego. [slides]

- Invited Speaker. 3D Object Recognition in Point Clouds. 2020. Stanford Vision and Learning Lab [slides]

- (In Chinese) Invited Speaker. The Development and Future of 3D Object Detection. 2021. Shenlan Xueyuan. [video]

- (In Chinese) Invited Speaker. Frustum PointNets for 3D Object Detection from RGB-D Data. 2019. GAMES Webinar Series 82. [video] [slides]

- (In Chinese) Invited Speaker. Deep Hough Voting for 3D Object Detection in Point Clouds. 2019. GAMES Webinar Series 121. [video] [slides]

- (In Chinese) Invited Speaker. Deep Learning on Point Clouds for 3D Scene Understanding. 2018. Jiangmen TechBeat. [video] [slides]

- Guest Lecturer. Spring 2017-18: The Shape of Data: Geometric and Topological Data Analysis at Stanford University.

- Guest Lecturer. Spring 2016-17: Machine Learning for 3D Data at Stanford University.